Diverse architectural patterns and extensive organizational access to data have made data movement par for the course in today's cloud environments. As you'd imagine, unmanaged data flows can create compliance challenges and limit visibility into potential security breaches. To monitor data movement successfully, security teams must establish a baseline for where data should reside, detect suspicious activity and prioritize incidents involving sensitive or compliance-related data.

In this post, we look at cloud data flow and the risks associated with the transfer, replication or ingestion of data between cloud datastores, such as managed databases, object storage and virtual machines.

How Sensitive Data Travels Through Cloud Environments

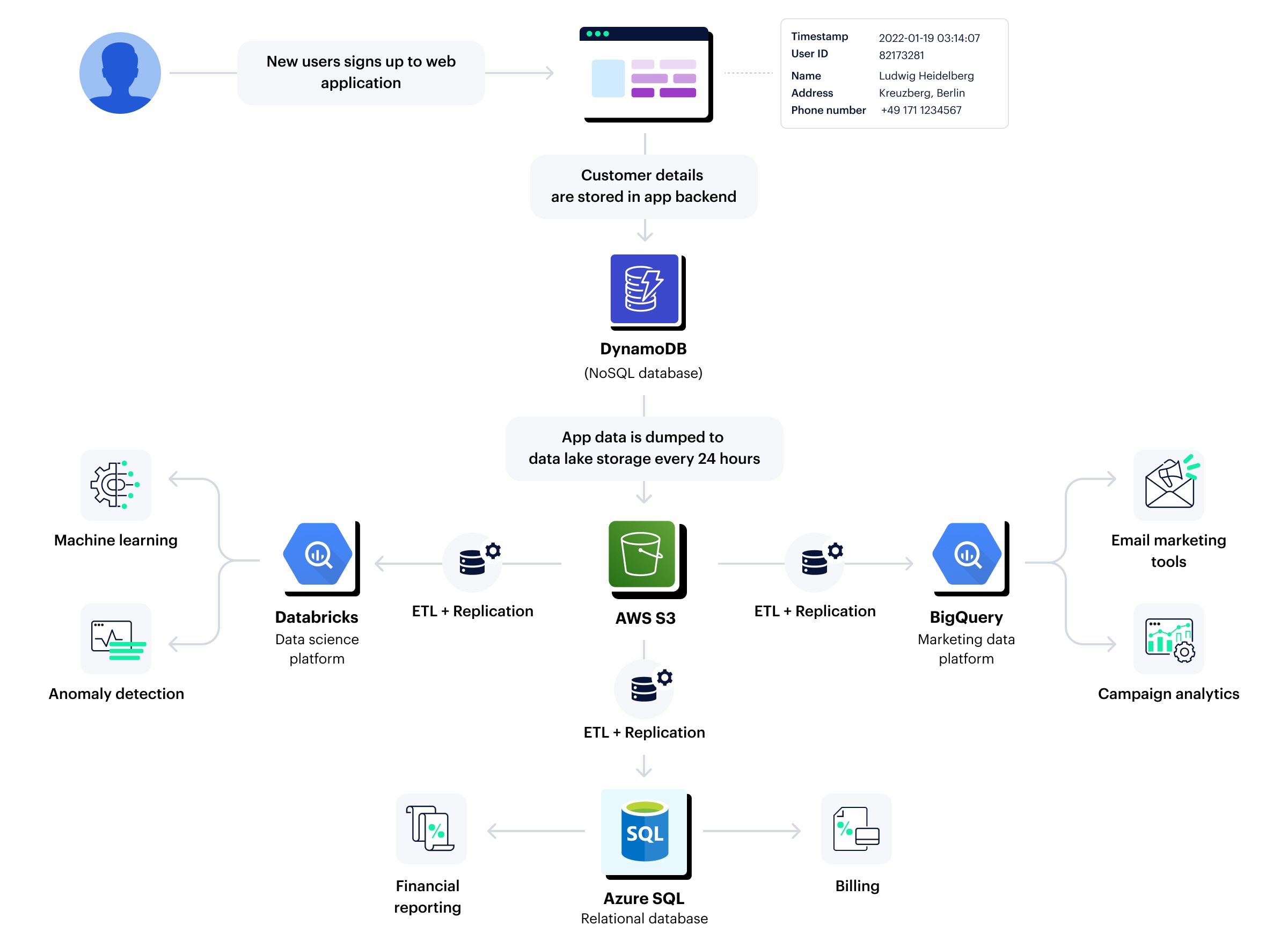

Let’s imagine how a single PII-containing CRM record can travel and consider the security implications.

When a user signs up for a web app and submits their PII to create an account, the data is immediately stored in the app's DynamoDB backend. Every 24 hours, the app's data is exported to Amazon S3. To support analytical use cases such as RevOps, data science and financial reporting, the customer's data is then replicated to three data platforms. As a result, within a single day, the same PII record can be found in at least five locations (DynamoDB, S3 and the three analytics platforms), often after multiple transformations and enrichments.
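To make the fan-out concrete, here is a minimal Python sketch that models the flow in Figure 1 as a directed graph and walks it to list every datastore a record can reach. The datastore names are hypothetical placeholders; in practice, the edges would come from discovery tooling or pipeline metadata rather than a hard-coded dictionary.

```python
from collections import deque

# Hypothetical flow graph for the example above: each datastore maps to the
# datastores it copies data into.
FLOWS: dict[str, list[str]] = {
    "dynamodb-app-backend": ["s3-daily-export"],
    "s3-daily-export": [
        "revops-platform",
        "data-science-platform",
        "finance-reporting-platform",
    ],
}


def reachable_stores(origin: str, flows: dict[str, list[str]]) -> set[str]:
    """Return every datastore a record written to `origin` can end up in,
    including `origin` itself, using a breadth-first search."""
    seen = {origin}
    queue = deque([origin])
    while queue:
        store = queue.popleft()
        for target in flows.get(store, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


locations = reachable_stores("dynamodb-app-backend", FLOWS)
print(sorted(locations))
print(len(locations))  # 5
```

Adding staging copies, test environments or shadow datastores as extra edges immediately shows how many more places the same record can land.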

The simplified example depicted in Figure 1 doesn’t account for staging and testing environments, unmanaged databases or shadow data. Still, you can see how the same record exists in five datastores, is processed by applications potentially running in different regions and — depending on the architecture in place — might exist on more than one public cloud.

Security Concerns Related to Cloud Data Flows

1. Complying with Data Residency and Sovereignty Gets Complicated

In the Figure 1 example, the PII belongs to Mr. Heidelberg of Berlin, Germany, and is therefore likely subject to the General Data Protection Regulation (GDPR). To meet data sovereignty requirements, the organization will typically need to keep the data on servers located in the European Union (or in countries recognized as providing adequate data protection).

It’s quite likely that the cloud account spans multiple regions, including non-compliant ones. If the data is moved or duplicated across datastores and cloud regions, it becomes difficult to track where every copy lives and to ensure each one is stored in an approved region.
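A residency check can be sketched as a comparison between where each asset lives and an approved-region list. The following Python sketch assumes an inventory that records each asset's AWS region and whether it holds EU personal data. The region allowlist is an illustrative assumption, not legal guidance; the right list is a decision for your compliance team.

```python
from dataclasses import dataclass

# Assumed policy: AWS region codes the organization has approved for EU personal data.
# Illustrative only; the real allowlist is a legal and compliance decision.
APPROVED_EU_REGIONS = {"eu-central-1", "eu-west-1", "eu-west-3", "eu-north-1", "eu-south-1"}


@dataclass(frozen=True)
class DataAsset:
    name: str
    region: str
    holds_eu_personal_data: bool


def residency_violations(assets: list[DataAsset]) -> list[DataAsset]:
    """Return assets that hold EU personal data outside the approved regions."""
    return [
        asset
        for asset in assets
        if asset.holds_eu_personal_data and asset.region not in APPROVED_EU_REGIONS
    ]


# Hypothetical inventory, e.g. exported from a data discovery scan.
inventory = [
    DataAsset("dynamodb-app-backend", "eu-central-1", True),
    DataAsset("s3-daily-export", "eu-central-1", True),
    DataAsset("data-science-platform", "us-east-1", True),
    DataAsset("marketing-assets", "us-east-1", False),
]

for asset in residency_violations(inventory):
    print(f"{asset.name} holds EU personal data in {asset.region}")
# data-science-platform holds EU personal data in us-east-1
```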

To prevent compliance issues, the organization needs to identify PII across its cloud environments, as well as connected records. For example, the CRM record might have been joined with additional data, such as support tickets or marketing interactions, which will also need to be accounted for.
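One way to account for connected records is to let sensitivity labels flow through lineage, so that any dataset built from a PII dataset inherits its labels. The Python sketch below assumes lineage is available as a mapping from each dataset to its inputs (for example, the tables a join reads from); the dataset names and labels are hypothetical. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) so each dataset is processed only after its inputs.

```python
from graphlib import TopologicalSorter

# Hypothetical lineage: each dataset maps to the datasets it was built from,
# e.g. the tables read by a SQL join. Source datasets have no inputs.
DERIVED_FROM: dict[str, set[str]] = {
    "crm_records": set(),
    "support_tickets": set(),
    "marketing_events": set(),
    "customer_360": {"crm_records", "support_tickets"},
    "campaign_report": {"customer_360", "marketing_events"},
}

# Labels a classifier assigned directly to source datasets (assumed).
DIRECT_LABELS: dict[str, set[str]] = {
    "crm_records": {"PII", "EU_RESIDENT"},
    "support_tickets": {"PII"},
}


def propagate_labels(
    derived_from: dict[str, set[str]], direct_labels: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Give every dataset its own labels plus all labels of its inputs."""
    labels: dict[str, set[str]] = {}
    # static_order() yields each dataset only after all of its inputs.
    for dataset in TopologicalSorter(derived_from).static_order():
        inherited = set().union(*(labels[p] for p in derived_from.get(dataset, set())))
        labels[dataset] = set(direct_labels.get(dataset, set())) | inherited
    return labels


labels = propagate_labels(DERIVED_FROM, DIRECT_LABELS)
print(sorted(labels["campaign_report"]))  # ['EU_RESIDENT', 'PII']
```

Here a marketing report that never touched the CRM table directly still ends up labeled as EU-resident PII, because one of its inputs was derived from it.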

2. Sensitive Data Travels Between Environments Instead of Staying Segregated

Keeping sensitive data separate and under stricter access control policies is a basic data security best practice, and standards such as ISO/IEC 27002:2022 call for it. This includes keeping development, test and production environments separate.

For convenience, or to run more realistic tests, developers commonly download data to their machines or copy it between environments. When that data is sensitive, however, moving it can put the organization at risk.

The organization needs to understand data flows between environments, identify when segregation requirements are being ignored and learn whether unauthorized principals gain access to the data along the way.
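The checks described above can be sketched as rules over data-movement events. This minimal Python example assumes events have already been assembled (for instance, from cloud audit logs) into a simple record with source and destination environments, the acting principal and a sensitivity flag. The event schema, environment names and authorized-principal list are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowEvent:
    """Hypothetical data-movement event, e.g. derived from cloud audit logs."""
    dataset: str
    source_env: str  # e.g. "prod", "staging", "dev", "laptop"
    dest_env: str
    principal: str   # identity that performed the copy
    sensitive: bool


# Assumed policy: identities allowed to move sensitive data.
AUTHORIZED_PRINCIPALS = {"etl-service-role"}


def segregation_findings(events: list[FlowEvent]) -> list[tuple[FlowEvent, str]]:
    """Flag sensitive data leaving production and moves by unauthorized principals."""
    findings = []
    for event in events:
        if not event.sensitive:
            continue
        if event.source_env == "prod" and event.dest_env != "prod":
            findings.append((event, "sensitive data left production"))
        if event.principal not in AUTHORIZED_PRINCIPALS:
            findings.append((event, f"unauthorized principal: {event.principal}"))
    return findings


events = [
    FlowEvent("crm_records", "prod", "prod", "etl-service-role", True),
    FlowEvent("crm_records", "prod", "dev", "dev-alice", True),
    FlowEvent("web_events", "prod", "dev", "dev-alice", False),
]
for event, reason in segregation_findings(events):
    print(f"{event.dataset}: {reason}")
```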

3. Security Incidents Can Fly Under the Radar

When nonstop data movement is considered business as usual, it’s easy for a data breach to go unnoticed. An organization that works with multiple vendors might not bat an eye when an external account accesses a sensitive database, but bad actors can exploit this kind of complacency. There are many examples of insecure access to resources leading to data breaches, such as the Capita incident from May 2023.

Mapping data flows helps identify the flows that should trigger an immediate response from SOC teams. If analysts see data going to an external account, they need to know immediately whether the account belongs to a known vendor and whether that vendor has permission to access the dataset.
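A first-pass triage for this question can be sketched as a lookup against a vendor registry. In this minimal Python sketch, the account IDs, vendor name and dataset permissions are hypothetical; a real registry would live in your vendor-management or identity system.

```python
# Hypothetical registries; the 12-digit IDs are placeholders, not real accounts.
ORG_ACCOUNTS = {"111111111111", "222222222222"}
VENDORS: dict[str, dict] = {
    "333333333333": {"name": "example-analytics-vendor", "datasets": {"web_events"}},
}


def triage_external_access(account_id: str, dataset: str) -> str:
    """Classify an access to `dataset` by the account `account_id`."""
    if account_id in ORG_ACCOUNTS:
        return "internal"
    vendor = VENDORS.get(account_id)
    if vendor is None:
        return "ALERT: unknown external account"
    if dataset not in vendor["datasets"]:
        return f"ALERT: {vendor['name']} is not approved for {dataset}"
    return f"approved vendor: {vendor['name']}"


print(triage_external_access("333333333333", "crm_records"))
# ALERT: example-analytics-vendor is not approved for crm_records
```

Distinguishing "unknown account" from "known vendor, wrong dataset" lets the SOC route the second case to the vendor owner rather than treating it as an active intrusion.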

Considerations for Monitoring Data Movement in Public Clouds

While data movement poses risks, it’s an inherent part of the way organizations leverage the public cloud. Similar to dealing with shadow data, security policies and solutions need to balance business agility and data breach prevention.

Data Breach Prevention Guidelines

Setting a Baseline

Effective monitoring requires that the security organization has a baseline of where data is meant to reside (physically or logically). It also requires the ability to identify deviations and prioritize the incidents that pose a risk — usually incidents that involve sensitive data flowing into unauthorized or unmonitored datastores.
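In code, a baseline can be as simple as a mapping from each dataset to the datastores where it is allowed to reside. The Python sketch below compares observed (dataset, datastore) pairs, such as those produced by a discovery scan, against that baseline and ranks deviations by an assumed sensitivity score. All names and scores are hypothetical.

```python
# Hypothetical baseline: where each dataset is meant to reside.
BASELINE: dict[str, set[str]] = {
    "crm_records": {"dynamodb-app-backend", "s3-daily-export", "revops-platform"},
    "web_events": {"s3-daily-export", "data-science-platform"},
}
# Assumed sensitivity scale: higher means more sensitive.
SENSITIVITY = {"crm_records": 3, "web_events": 1}
# Assumption: datasets missing from the inventory are treated as highly sensitive.
UNCLASSIFIED_SCORE = 3


def deviations(observed: list[tuple[str, str]]) -> list[tuple[int, str, str]]:
    """Return (score, dataset, datastore) for each observation outside the
    baseline, most sensitive first."""
    found = []
    for dataset, store in observed:
        if store not in BASELINE.get(dataset, set()):
            found.append((SENSITIVITY.get(dataset, UNCLASSIFIED_SCORE), dataset, store))
    return sorted(found, reverse=True)


observed = [
    ("crm_records", "dynamodb-app-backend"),
    ("web_events", "dev-scratch-bucket"),
    ("crm_records", "dev-scratch-bucket"),
]
print(deviations(observed))
# [(3, 'crm_records', 'dev-scratch-bucket'), (1, 'web_events', 'dev-scratch-bucket')]
```

Scoring unclassified data as high risk is a deliberate choice in this sketch: data nobody has classified yet is exactly the shadow data that tends to slip past monitoring.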

Look at the Past, Present and Future of Your Data

In some cases, you’ll be able to prevent a policy violation before it happens. In other cases, your monitoring should lead to further analysis and forensic investigation. Monitoring should encompass:

- The past: Where was the data created? Where does it belong? Are there additional versions of this data?

- The present: Where is the data now? How did it get there? Does it belong in this datastore, or should it be elsewhere? Is it moving where it shouldn't?

- The future: Is there an opportunity for the data to move where it shouldn't? Can we prevent unauthorized data movement, or stop it if it happens?
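The "future" question can be approached by looking for principals that could move data even if they haven't done so yet. This minimal Python sketch assumes a snapshot of effective permissions (which stores each principal can read and write) and flags any principal that can both read a sensitive store and write to a non-compliant one. The principals, stores and classifications are hypothetical.

```python
# Hypothetical snapshot of effective permissions per principal.
PERMISSIONS: dict[str, dict[str, set[str]]] = {
    "etl-service-role": {"read": {"crm-db"}, "write": {"eu-warehouse"}},
    "analyst-role": {"read": {"crm-db"}, "write": {"us-scratch-bucket"}},
    "dev-role": {"read": {"test-db"}, "write": {"us-scratch-bucket"}},
}
SENSITIVE_STORES = {"crm-db"}
NON_COMPLIANT_STORES = {"us-scratch-bucket"}


def potential_bad_paths(perms: dict[str, dict[str, set[str]]]) -> list[tuple[str, str, str]]:
    """List (principal, source, destination) triples where a principal could copy
    sensitive data into a non-compliant store."""
    paths = []
    for principal, access in sorted(perms.items()):
        for source in sorted(access["read"] & SENSITIVE_STORES):
            for dest in sorted(access["write"] & NON_COMPLIANT_STORES):
                paths.append((principal, source, dest))
    return paths


print(potential_bad_paths(PERMISSIONS))
# [('analyst-role', 'crm-db', 'us-scratch-bucket')]
```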

Data Context and Content Matter

Not all data is created equal. Classifying your data and creating an inventory of sensitive assets should help you prioritize risks. For each data asset, you should be able to answer:

- Does this data have locality or sovereignty characteristics (e.g., data related to EU or California residents)?

- Does your data have other compliance-related characteristics (e.g., electronic health records)?

- Are the same sensitive records stored in multiple datastores?

- Which security policy does the data fall under?
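To answer whether the same sensitive records live in several datastores, one common approach is to fingerprint records by a normalized identifier and group the fingerprints by store. The Python sketch below uses a keyed hash (HMAC-SHA256 from the standard library) of a normalized email address, so the index itself doesn't hold raw PII. The key, store names and the choice of email as the matching field are assumptions.

```python
import hashlib
import hmac
from collections import defaultdict

# Assumed secret; in practice, load it from a secrets manager.
FINGERPRINT_KEY = b"replace-with-a-managed-secret"


def fingerprint(record: dict) -> str:
    """Keyed hash of the normalized email, so the index holds no raw PII."""
    normalized = record["email"].strip().lower()
    return hmac.new(FINGERPRINT_KEY, normalized.encode(), hashlib.sha256).hexdigest()


def find_duplicates(stores: dict[str, list[dict]]) -> dict[str, set[str]]:
    """Map each fingerprint seen in two or more stores to the stores holding it."""
    seen: dict[str, set[str]] = defaultdict(set)
    for store, records in stores.items():
        for record in records:
            seen[fingerprint(record)].add(store)
    return {fp: holders for fp, holders in seen.items() if len(holders) > 1}


# Hypothetical sample records from three datastores.
STORES = {
    "dynamodb-app-backend": [{"email": "h.heidelberg@example.de"}],
    "s3-daily-export": [{"email": " H.Heidelberg@example.de "}],
    "revops-platform": [{"email": "someone.else@example.com"}],
}
for holders in find_duplicates(STORES).values():
    print(sorted(holders))  # ['dynamodb-app-backend', 's3-daily-export']
```

A keyed hash rather than a plain one matters here: an unkeyed SHA-256 of an email address can often be reversed by hashing candidate addresses.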

Make Data Movement Transparent for Security Teams

Once you’ve identified and prioritized your sensitive data assets, you need visibility into that data whenever it moves between regions, between environments or to external consumers, such as vendors or service providers.

Develop the Ability to Identify When an Incident Has Occurred

Your monitoring shouldn’t stop at reducing static risk to data. Monitoring data flows in real time should also alert you to high-risk incidents, such as:

- Sensitive data moving between development and production environments

- A configuration change creating an opportunity for unauthorized access to the data

- Data moving into non-compliant storage locations (e.g., EU resident data moving outside the EU)

- Unusually large volumes of data being downloaded or locally copied
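The four incident types above can be sketched as detection rules evaluated on each event. The Python example below assumes a simplified, hypothetical event schema and an illustrative download threshold. Real monitoring would typically judge "unusually large" against a per-principal baseline rather than a fixed number.

```python
from dataclasses import dataclass

# Illustrative values; tune to your own environment and policies.
EU_REGIONS = {"eu-central-1", "eu-west-1", "eu-west-3", "eu-north-1", "eu-south-1"}
DOWNLOAD_ALERT_BYTES = 5 * 1024**3  # 5 GiB, an assumed threshold


@dataclass
class Event:
    """Hypothetical normalized event from cloud audit logs."""
    kind: str  # "copy", "config_change" or "download"
    sensitive: bool = False
    source_env: str = ""
    dest_env: str = ""
    dest_region: str = ""
    eu_resident_data: bool = False
    grants_public_access: bool = False
    bytes_transferred: int = 0


def detect(event: Event) -> list[str]:
    """Return the alerts an event triggers (empty list if none)."""
    alerts = []
    if event.kind == "copy" and event.sensitive and {event.source_env, event.dest_env} == {"dev", "prod"}:
        alerts.append("sensitive data moving between dev and prod")
    if event.kind == "config_change" and event.sensitive and event.grants_public_access:
        alerts.append("config change exposes sensitive data")
    if event.kind == "copy" and event.eu_resident_data and event.dest_region not in EU_REGIONS:
        alerts.append("EU resident data copied outside approved EU regions")
    if event.kind == "download" and event.bytes_transferred >= DOWNLOAD_ALERT_BYTES:
        alerts.append("unusually large download")
    return alerts


print(detect(Event("copy", sensitive=True, source_env="prod", dest_env="dev")))
```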

Steps to Reduce Data Flow Risk

Of course, monitoring is just the first step. Once you have a clear picture of your cloud data flows, you can design an effective data security strategy that protects data at rest and in motion.

Tips to Minimize Risks of Data Leakage

- Reduce the attack surface: Remove duplicate records and review data retention policies to prevent your organization’s data footprint from growing unnecessarily large.

- Enforce compliance: Use automated controls to monitor and identify data movement that causes non-compliance with GDPR, PCI DSS or any other regulation.

- Notify owners: Whenever an incident is detected, have an automated process in place to alert the data owners (and other stakeholders, if needed).

- Trigger workflows to fix compliance and security issues: Automate processes to address policy violations as soon as they occur.

- Enforce data hygiene: Periodically review your data assets and associated permissions to prevent data sprawl and shadow data.
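The "notify owners" and "trigger workflows" steps can be sketched as a small router that sends each finding to its data owner and runs any remediation registered for that issue type. In this Python sketch, the ownership registry, issue names and the notification and remediation callables are hypothetical stand-ins for your ticketing, chat or SOAR integrations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Finding:
    dataset: str
    datastore: str
    issue: str  # e.g. "residency_violation" (hypothetical issue type)


# Hypothetical ownership registry; unowned data falls back to the security team.
OWNERS = {"crm_records": "crm-team@example.com"}
DEFAULT_OWNER = "security-team@example.com"


def route(
    finding: Finding,
    notify: Callable[[str, str], None],
    remediations: dict[str, Callable[[Finding], None]],
) -> None:
    """Notify the data owner, then run the remediation registered for the issue, if any."""
    owner = OWNERS.get(finding.dataset, DEFAULT_OWNER)
    notify(owner, f"{finding.issue}: {finding.dataset} in {finding.datastore}")
    action = remediations.get(finding.issue)
    if action is not None:
        action(finding)


# Usage with in-memory stand-ins for real integrations.
sent: list[tuple[str, str]] = []
quarantined: list[str] = []
route(
    Finding("crm_records", "us-scratch-bucket", "residency_violation"),
    notify=lambda to, msg: sent.append((to, msg)),
    remediations={"residency_violation": lambda f: quarantined.append(f.datastore)},
)
print(sent)
print(quarantined)
```

Passing the notification and remediation hooks in as callables keeps the routing logic independent of any particular ticketing or messaging tool.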

Learn More

Data flows raise significant security and compliance challenges. Static and dynamic data monitoring with Prisma Cloud, however, gives organizations a comprehensive view of their sensitive data’s movement, enabling near real-time alerts on potential security breaches and proactive risk mitigation. To learn more about securing your data landscape with data security posture management (DSPM) and data detection and response (DDR), download our definitive guide today.

And if you haven’t tried Prisma Cloud, we’d love for you to experience the advantage with a free 30-day trial.