In recent years, web application owners have faced a dramatic increase in automated web bots such as web scrapers, account takeover tools, scalpers, credit card stuffers, automated attack tools and more. Detecting, classifying and managing the different bot types is becoming increasingly hard as bot technologies grow more sophisticated and harder to pinpoint. Moreover, bots are not always harmful: some bots, such as search engine crawlers, should always be allowed to traverse your web applications in order to properly index and rank them.

Palo Alto Networks recently launched bot protection capabilities for cloud native applications as part of the new Web Application and API Security (WAAS) module. The new capabilities include best-of-breed bot detection and classification, allowing customers to manage bot risks with granular controls and visibility.

What Are Bots?

A bot is a piece of software or a script that executes automated tasks over the web. Bots often mimic human website interaction and can be deployed to conduct tasks at speed and scale. Here are a few examples of popular web bots:

- Search engine index bots

- Website SEO analyzer bots

- Feed fetching bots

Bots can be used in very diverse ways. Naturally, there are “helpful” or “good” bots, such as those above, and there are “malicious” bots, such as account takeover bots, automated DoS tools, and so forth.

Since application owners would like to allow good bot traffic to their site while preventing potential damage caused by malicious bots, deploying an intelligent bot risk management solution is critical.

Detecting Bots: From A to Z

Some bots, especially those with good or helpful intentions, will usually identify themselves using the HTTP ‘User-Agent’ header. For example, one of Google’s search engine bots will provide the following User-Agent header value:

Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

Or Microsoft’s Bing search engine:

Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)

Automation frameworks and HTTP libraries also send User-Agent strings that, by default, include the framework’s name and version. Some common examples:

go-http-client/1.1

python-requests/2.22.0

masscan/1.0 (https://github.com/robertdavidgraham/masscan)

curl/7.54.0
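Classifying a request by its User-Agent, as described above, boils down to matching the header against a table of known signatures. The Python sketch below is a minimal illustration, not how WAAS implements it: the category names and the small signature table are hypothetical and only cover the example strings shown here.

```python
import re

# Hypothetical signature table of (category, pattern) pairs. Real products ship
# large, curated lists; these entries only mirror the examples above.
UA_SIGNATURES = [
    ("search_engine", re.compile(r"googlebot|bingbot", re.IGNORECASE)),
    ("http_library", re.compile(r"go-http-client|python-requests|curl/", re.IGNORECASE)),
    ("scanner", re.compile(r"masscan", re.IGNORECASE)),
]


def classify_user_agent(user_agent: str) -> str:
    """Return the first matching bot category, or 'unclassified'."""
    for category, pattern in UA_SIGNATURES:
        if pattern.search(user_agent):
            return category
    return "unclassified"


print(classify_user_agent("curl/7.54.0"))  # http_library
```

Because this lookup trusts whatever the client chooses to send, it is easy to fool, which is exactly what the evasion techniques below exploit.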

Common Bot Evasions

A web client that identifies itself as a known bot is probably not a regular human user, but it still shouldn’t be trusted blindly, since anyone can spoof the User-Agent header value. Bot operators employ various evasion techniques to avoid getting spotted. For example:

- Browser impersonation: Bot operators manipulate the User-Agent or HTTP request structure to mimic those used by common web browsers such as Chrome, Firefox, Safari, etc.

- Known good-bot impersonation: Web applications often take care not to block known good bots, such as the search engine crawlers that determine a site’s ranking in search results. Bot operators try to impersonate these known good bots to avoid being blocked.

- Cookie dropping: Advanced bot detection methods rely on cookies for bot classification and for keeping session state. Furthermore, web applications often give requests that arrive without a cookie a ‘first request grace’: the page content is served along with a ‘Set-Cookie’ response header. Bot operators exploit this grace by never presenting a cookie, tricking the application into treating every request as if it came from a new user.
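A common countermeasure against known good-bot impersonation is forward-confirmed reverse DNS, which Google documents for verifying Googlebot: resolve the client IP to a hostname, check that the hostname belongs to a Google crawler domain, then resolve that hostname back and confirm it maps to the same IP. The sketch below assumes the `googlebot.com` and `google.com` suffixes (check Google’s current guidance before relying on them) and accepts the DNS lookups as parameters so the logic can be tested without network access.

```python
import socket
from typing import Callable, Sequence

# Assumed hostname suffixes for genuine Googlebot reverse-DNS names.
GOOGLEBOT_DOMAINS = (".googlebot.com", ".google.com")


def reverse_lookup(ip: str) -> str:
    # PTR lookup; raises socket.herror (an OSError) if there is no record.
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> Sequence[str]:
    # A-record lookup; returns the list of IPv4 addresses for the hostname.
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], Sequence[str]] = forward_lookup,
) -> bool:
    """Forward-confirmed reverse DNS check for a client claiming to be Googlebot."""
    try:
        hostname = reverse(ip)
    except OSError:
        return False  # No PTR record: the claim cannot be verified.
    if not hostname.rstrip(".").endswith(GOOGLEBOT_DOMAINS):
        return False  # Reverse name is not in a Google crawler domain.
    try:
        # The forward lookup must map back to the same IP, otherwise the
        # attacker may simply control the PTR record for their own address.
        return ip in forward(hostname)
    except OSError:
        return False
```

A request whose User-Agent says Googlebot but fails this check can then be treated as a bot impersonator rather than a search engine.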

Bot detection techniques can be divided into two main categories: static and dynamic.

Static Bot Detection

Static detection uses a combination of different methods, all of which inspect HTTP traffic statically. For example:

- Classify and categorize bots based on their User-Agent header value.

- Identify anomalies within HTTP message format and values, which are indicative of bots.

- Identify bots that mimic legitimate web browsers based on HTTP discrepancies.
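The third check, spotting clients that claim to be a browser without behaving like one at the HTTP level, can be illustrated with a simple header heuristic. The sketch assumes that mainstream browsers normally send `Accept`, `Accept-Language` and `Accept-Encoding` on page requests; the header list and browser tokens are illustrative choices, and production detectors compare many more attributes, such as header order and values.

```python
from typing import List, Mapping

# Assumption: mainstream browsers normally send these headers on page requests.
EXPECTED_BROWSER_HEADERS = ("accept", "accept-language", "accept-encoding")
# Illustrative tokens that appear in common browser User-Agent strings.
BROWSER_TOKENS = ("Chrome/", "Firefox/", "Safari/")


def browser_discrepancies(headers: Mapping[str, str]) -> List[str]:
    """Return expected browser headers missing from a request that claims to be a browser."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    user_agent = normalized.get("user-agent", "")
    if not any(token in user_agent for token in BROWSER_TOKENS):
        return []  # Not claiming to be a browser; other static checks apply.
    return [name for name in EXPECTED_BROWSER_HEADERS if name not in normalized]


# A script that copies a Chrome User-Agent string but nothing else.
spoofed = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"}
print(browser_discrepancies(spoofed))  # ['accept', 'accept-language', 'accept-encoding']
```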

Mimicking commonly used browsers and known good bots by manipulating the content of an HTTP request is trivial. This is why more advanced bot detection techniques should be employed on top of static bot signatures.

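Dynamic Bot Detection

Dynamic detection goes beyond inspecting individual requests and actively tests how the client behaves. Common techniques include: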

- Web session-based detections (e.g. cookie and redirection support)

- JavaScript-based browser impersonator detections, such as device fingerprinting or anomalies detected in the client’s environment

- CAPTCHA challenges
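The cookie and redirection checks can be sketched as follows. This is a hypothetical design, not WAAS’s: a client without a valid cookie is redirected with a signed, time-limited token in `Set-Cookie`; a real browser returns the cookie and is served, while a client that keeps arriving without one (the cookie-dropping evasion described earlier) is flagged after a few attempts. The secret key, TTL, threshold and keying by IP address are all assumptions.

```python
import hashlib
import hmac
import time
from typing import Dict, Optional, Tuple

SECRET_KEY = b"replace-with-a-random-server-secret"  # hypothetical server secret
TOKEN_TTL_SECONDS = 3600  # assumed cookie lifetime


def _sign(client_ip: str, issued: int) -> str:
    msg = f"{client_ip}|{issued}".encode()
    return hmac.new(SECRET_KEY, msg, hashlib.sha256).hexdigest()


def issue_token(client_ip: str, now: Optional[float] = None) -> str:
    """Create the cookie value: issue time plus an HMAC bound to the client IP."""
    issued = int(time.time() if now is None else now)
    return f"{issued}.{_sign(client_ip, issued)}"


def token_is_valid(token: str, client_ip: str, now: Optional[float] = None) -> bool:
    try:
        issued_str, sig = token.split(".", 1)
        issued = int(issued_str)
    except ValueError:
        return False  # Malformed cookie value.
    current = time.time() if now is None else now
    if current - issued > TOKEN_TTL_SECONDS:
        return False  # Expired.
    # Constant-time comparison avoids leaking signature bytes via timing.
    return hmac.compare_digest(sig, _sign(client_ip, issued))


def handle_request(
    client_ip: str,
    cookie: Optional[str],
    cookieless_counts: Dict[str, int],
    max_cookieless: int = 3,
    now: Optional[float] = None,
) -> Tuple[str, Optional[str]]:
    """Return ('serve', None), ('redirect', token_to_set) or ('flag', None)."""
    if cookie and token_is_valid(cookie, client_ip, now):
        cookieless_counts.pop(client_ip, None)  # Client supports cookies.
        return "serve", None
    count = cookieless_counts.get(client_ip, 0) + 1
    cookieless_counts[client_ip] = count
    if count > max_cookieless:
        return "flag", None  # Keeps ignoring Set-Cookie: likely a cookie dropper.
    return "redirect", issue_token(client_ip, now)
```

Keying by IP address is a simplification: clients behind a shared NAT would share one counter, so a real deployment would need a finer-grained client identifier.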

Handling Detected Bots

Detecting and classifying bots is only the first step in managing bots. The second step is to decide how to handle every type or class of detected bot. Different actions for handling bots include:

- Logging bot activity, but taking no other action.

- Preventing the bot from accessing the web application.

- Banning the bot for a certain period of time.

- Displaying a CAPTCHA challenge to distinguish humans from suspected bots.
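Once a bot has been classified, choosing among these actions amounts to a policy lookup plus some state for time-limited bans. The sketch below is illustrative only: the category names, the policy table and the 10-minute default ban are hypothetical, and unknown categories default to logging.

```python
import logging
import time
from enum import Enum
from typing import Dict, Optional

log = logging.getLogger("bot-policy")


class Action(Enum):
    LOG = "log"          # record the bot, let the request through
    PREVENT = "prevent"  # block this request only
    BAN = "ban"          # block this client for a period of time
    CAPTCHA = "captcha"  # challenge the client to prove it is human


# Hypothetical policy table: detected bot category -> action.
POLICY: Dict[str, Action] = {
    "search_engine": Action.LOG,
    "http_library": Action.CAPTCHA,
    "cookie_dropper": Action.PREVENT,
    "scanner": Action.BAN,
}


class BotEnforcer:
    def __init__(self, policy: Dict[str, Action], ban_seconds: float = 600) -> None:
        self.policy = policy
        self.ban_seconds = ban_seconds
        self.banned_until: Dict[str, float] = {}  # client IP -> ban expiry time

    def decide(self, client_ip: str, category: str, now: Optional[float] = None) -> str:
        """Return 'allow', 'block' or 'challenge' for one classified request."""
        now = time.time() if now is None else now
        if self.banned_until.get(client_ip, 0.0) > now:
            return "block"  # An earlier ban is still active.
        action = self.policy.get(category, Action.LOG)  # Unknown categories are only logged.
        log.info("bot %s from %s -> %s", category, client_ip, action.value)
        if action is Action.BAN:
            self.banned_until[client_ip] = now + self.ban_seconds
            return "block"
        if action is Action.PREVENT:
            return "block"
        if action is Action.CAPTCHA:
            return "challenge"
        return "allow"
```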

Prisma Cloud Bot Protection

Prisma Cloud now offers bot protection as part of our Web Application and API Security (WAAS) module. The new features include customizable bot detection and classification capabilities, allowing customers to manage bot risks with granular controls and visibility.

Customers using WAAS can quickly set up their desired protection policy with best-of-breed features:

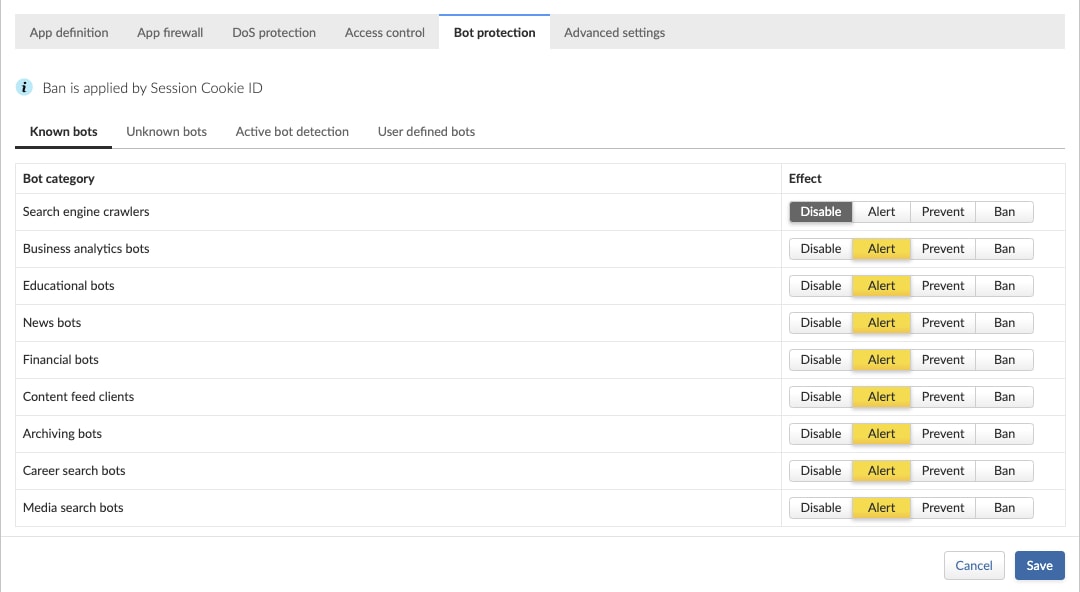

Static Detection and Classification of Known Bots

WAAS provides nine main known-bot categories, which cover hundreds of different known bots. For each category, users can choose the action to take upon detection: disable, alert, prevent or ban.

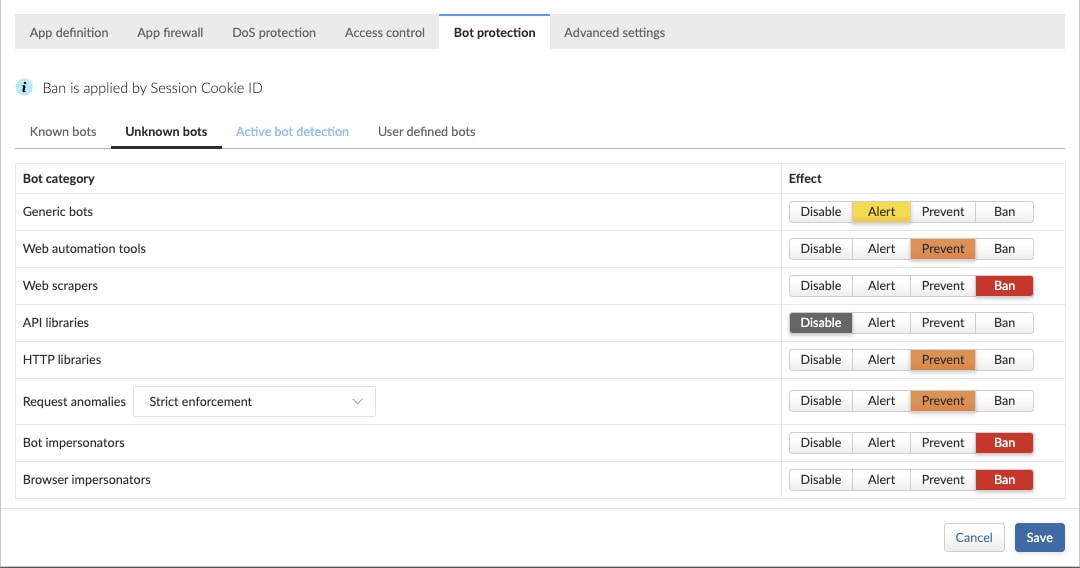

Static Detection of Unknown Bots

Not all bots can be classified by intent; for example, HTTP requests that originate from web development frameworks or from command-line tools such as cURL or Wget. For these types of bots, WAAS provides a range of additional static detection methods.

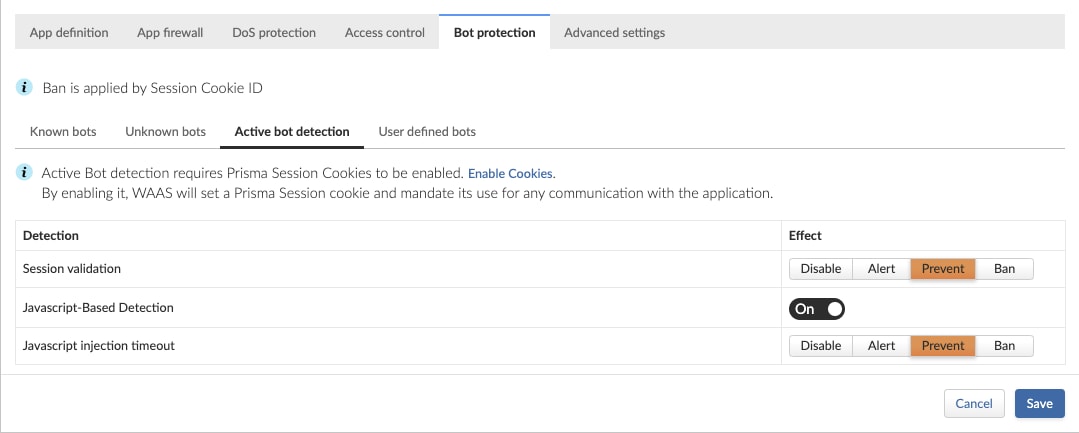

Active Detections for Sophisticated Bots

Since sophisticated bots can leverage automated headless browsers or simply mimic browser behavior, WAAS offers active bot detection tactics, which make use of web session cookies, redirection checks, interstitial pages, client-side fingerprinting and more.

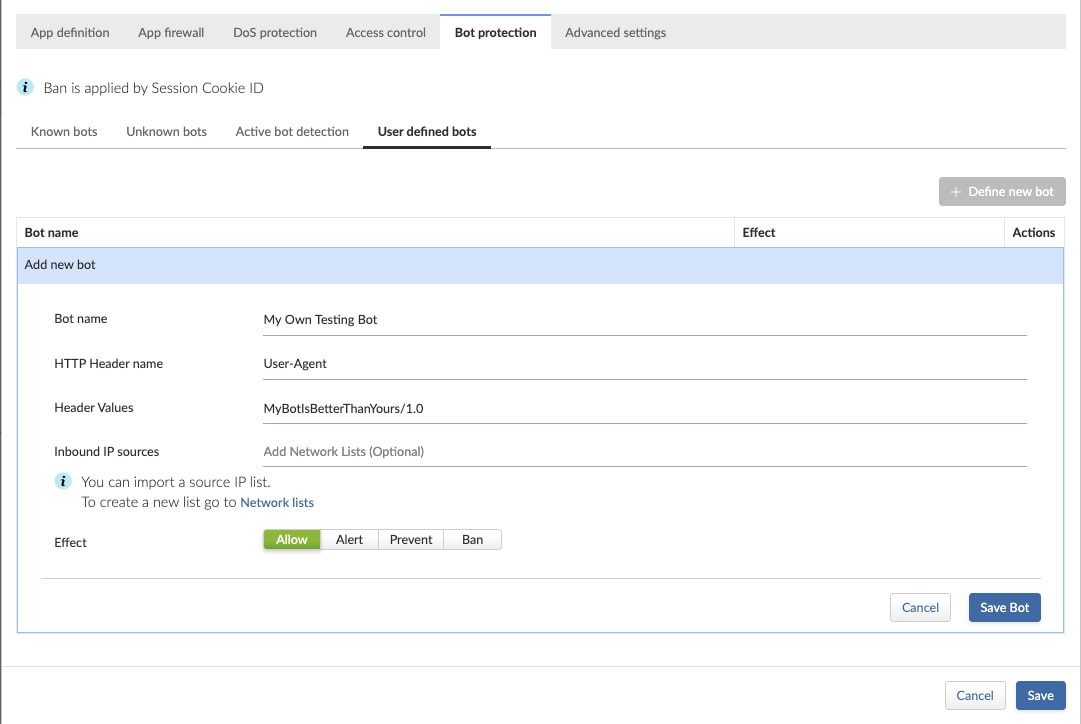

User-Defined Bot Rules

Some customers need to define their own bot rules, for example for their own scripts and automations. WAAS supports this through the ‘User-Defined Bots’ configuration.
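The general idea of a user-defined bot rule can be sketched as a match on request attributes paired with an action. The rule fields below (a User-Agent substring and a list of allowed source networks) and the example rule are hypothetical and do not reflect the actual ‘User-Defined Bots’ configuration schema.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class UserDefinedBotRule:
    name: str
    user_agent_contains: str
    source_networks: List[str] = field(default_factory=list)  # empty list = any source
    action: str = "allow"

    def matches(self, user_agent: str, client_ip: str) -> bool:
        if self.user_agent_contains.lower() not in user_agent.lower():
            return False
        if not self.source_networks:
            return True
        addr = ipaddress.ip_address(client_ip)
        return any(addr in ipaddress.ip_network(net) for net in self.source_networks)


def first_matching_rule(
    rules: List[UserDefinedBotRule], user_agent: str, client_ip: str
) -> Optional[UserDefinedBotRule]:
    """Rules are evaluated in order; the first match wins."""
    return next((r for r in rules if r.matches(user_agent, client_ip)), None)


# Hypothetical rule: an internal sync job that must only run from the corporate network.
rules = [UserDefinedBotRule("nightly-inventory-sync", "acme-sync/", ["10.20.0.0/16"])]
```

Requiring both the User-Agent and the source network to match keeps an outsider from gaining the rule’s treatment simply by copying the script’s User-Agent string.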

The following table maps each bot type to the WAAS bot detections that should be applied to it:

| Bot Type | WAAS Bot Detections |
| --- | --- |
| Users’ own scripts and automations | User-Defined Bot Rules |
| Known Good Bots | Static Bot Detections |
| HTTP Libraries and Automation Scripts | Static Bot Detections |
| Headless Browsers | Active Bot Detections |
| Browser Impersonators | Active Bot Detections |
| Bot Impersonators | Active Bot Detections |
| Cookie Droppers | Active Bot Detections |

Viewing and Analyzing Bot Traffic

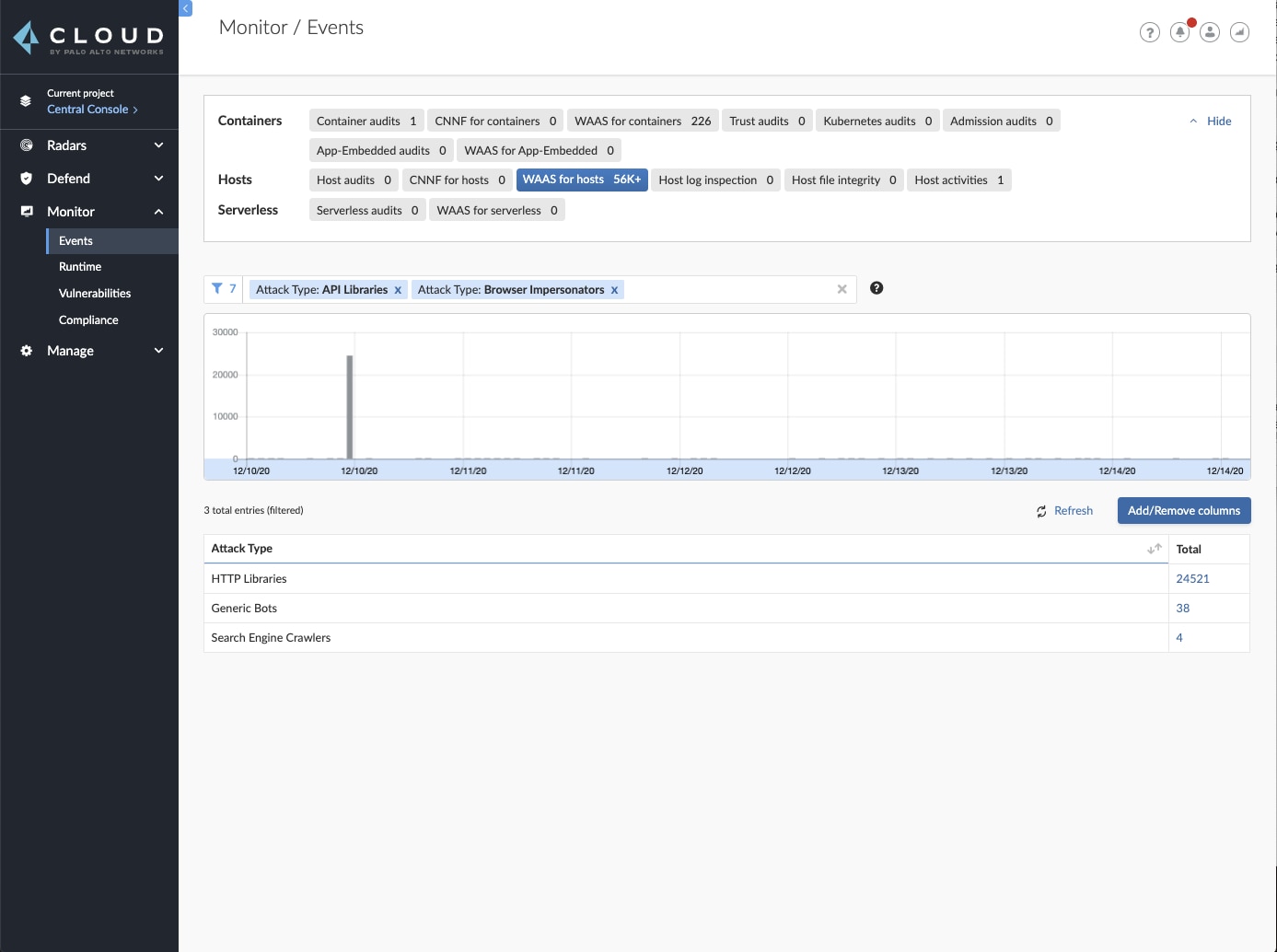

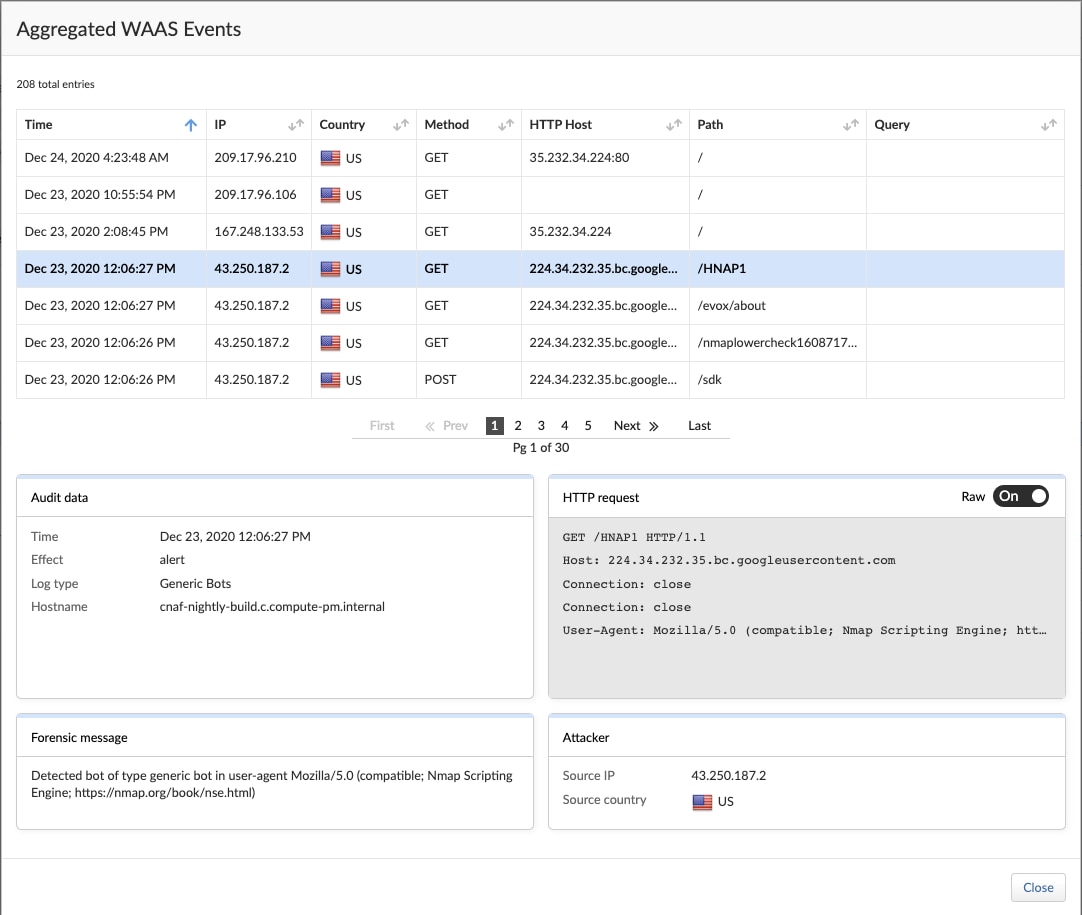

Using the new WAAS event analytics screen, users can browse thousands of security incidents and locate bot-related events using the provided filters.

Once filters are applied, users can aggregate events by various data dimensions and refine the filters to better understand the nature, scope and characteristics of the incident. Users can then drill into individual events to see the full HTTP traffic, forensic data and source of the bot.
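Filtering events and then aggregating them by a data dimension is essentially a group-and-count. The sketch below assumes hypothetical event records stored as dictionaries with keys such as `category` and `source_ip`; it does not reflect the WAAS event schema or API.

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional, Tuple

Event = Dict[str, str]


def aggregate(
    events: Iterable[Event],
    dimension: str,
    filters: Optional[Dict[str, str]] = None,
) -> List[Tuple[str, int]]:
    """Keep events matching every filter, then count them per value of `dimension`."""
    filters = filters or {}
    selected = (e for e in events if all(e.get(k) == v for k, v in filters.items()))
    # most_common() sorts by count, highest first.
    return Counter(e.get(dimension, "unknown") for e in selected).most_common()


# Hypothetical events; real event records carry many more fields.
events = [
    {"category": "scanner", "source_ip": "203.0.113.9", "action": "ban"},
    {"category": "scanner", "source_ip": "203.0.113.9", "action": "ban"},
    {"category": "http_library", "source_ip": "198.51.100.7", "action": "alert"},
]
print(aggregate(events, "source_ip", {"category": "scanner"}))  # [('203.0.113.9', 2)]
```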

Begin Using Bot Protection

Bot protection will soon be available to all WAAS users. To learn more about the WAAS module, download our latest technical whitepaper, Raising the Bar on Web Application and API Security.

And to learn more about the latest updates to Prisma Cloud, check out our 2021 Virtual Summit, Building a Scalable Strategy for Cloud Security. The 90-minute event explores ways to shift your organization’s approach to cloud native app security, with best practices from organizations that have successfully navigated the challenges.