The Model Context Protocol (MCP), introduced by Anthropic in November 2024 to connect AI models to data, has taken off in recent months. Countless companies have announced new integrations based on this protocol, culminating in OpenAI’s March 2025 announcement that it too will adopt MCP. For organizations struggling to keep up with the protocol’s security implications, here’s a concise overview of what you need to know.

MCP — the Simple-as-Possible Explanation

For many newer AI implementations (such as agentic AI), MCP enables the AI system to interact with external systems, such as databases, files, web services and development tools. This capability is typically called tool use or function calling. MCP is meant to standardize communication between the AI system and these external systems.

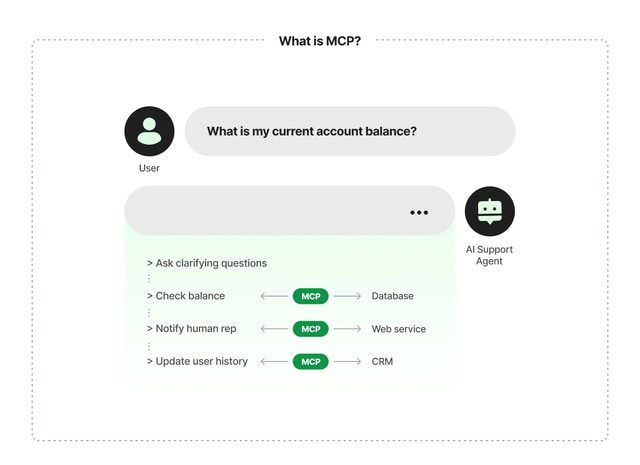

For example, let’s think of an AI support agent for a financial institution. To answer a customer’s query, such as “What is my current account balance?”, the agent might be instructed to ask for more details, fetch the balance from a database or notify a human agent in the company’s customer support platform. Afterward, the agent will log the conversation in the company’s CRM. The last three actions in this example require interaction with an external system, and that’s where MCP comes into play.

As depicted in figure 1, the MCP connection defines how data is retrieved from the database containing the account balance and sets expectations for the format in which the data arrives. The MCP server might also contain a repository of prompts that add context and guardrails to the model’s responses. When a specific action is required, the MCP server translates agent requests such as “check balance” into API calls executed against the external system.
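To make the translation step concrete, here is a minimal Python sketch of how an MCP server might route a tool call to a backend. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method. Everything else here (the `check_balance` tool name, the `get_balance` function, the in-memory balances) is a hypothetical stand-in, and a production server would more likely be built on an official MCP SDK than hand-rolled like this.

```python
import json

# Hypothetical stand-in for the bank's internal balance system.
_FAKE_BALANCES = {"acct-001": 1250.75}


def get_balance(account_id: str) -> float:
    """Pretend backend call; a real server would issue an HTTP or SQL request here."""
    return _FAKE_BALANCES[account_id]


# Tool registry: tool name -> Python callable (names are illustrative).
TOOLS = {"check_balance": get_balance}


def _error(req_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 message and dispatch tool calls to the registry."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return _error(req_id, -32601, "Method not found")

    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _error(req_id, -32602, "Unknown tool")

    # The agent's structured arguments become the backend call's parameters.
    result = tool(**params.get("arguments", {}))
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": req_id,
            "result": {"content": [{"type": "text", "text": str(result)}]},
        }
    )
```

The point of the sketch is the shape of the flow: the agent never touches the database directly; it emits a structured request, and the server decides which backend call that request maps to.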

As long as the underlying AI model (e.g., Anthropic’s Claude) supports the MCP standard, anyone can develop an MCP server, since the server’s communication with external systems is still handled via “traditional” interfaces such as HTTP APIs. In the example above, the chatbot developer could be the one who builds the MCP server.

In practice, however, many companies now offer their own MCP server to more readily integrate their systems into AI-powered workflows. In fact, hundreds of MCP servers are available via GitHub or mcp.so, with new ones added daily.

Given the purpose of this article, the overview above will suffice; we won’t delve into the various MCP concepts here.

What Are the Security Risks Associated with MCP?

Since the MCP standard is new, Palo Alto Networks Unit 42 (and undoubtedly other security research teams) is still probing its full security ramifications. With that said, several risks are immediately evident.

1/ Prompt Injection

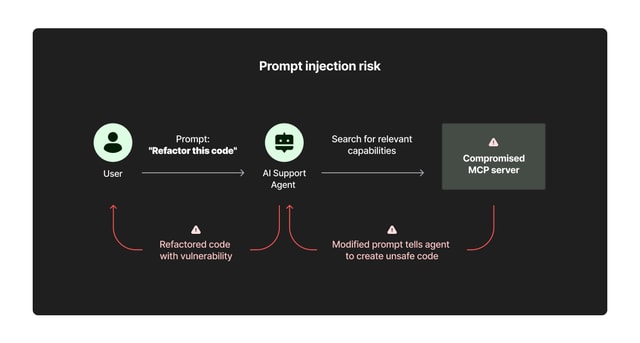

MCP servers can contain prompt repositories used to augment instructions given to AI agents. While these data assets are meant to improve or contextualize model behavior, they could be misused to cause an AI agent to behave in undesired, unexpected or malicious ways.

For example, an MCP server could contain prompts that instruct a coding agent to write insecure code or to ignore certain types of user requests. Alternatively, an MCP prompt could instruct the agent to perform actions such as modifying database records without the user’s permission.

2/ Credentials Exposure

As mentioned, an MCP server’s main purpose is to facilitate connections with external systems. These connections require credentials such as API keys. Even when an MCP server runs locally, as many do today, this setup still represents another instance of credential storage that can lead to exposure.

What’s more, an MCP server will often request broad permission scopes to provide flexible functionality, and the same server might require permissions to multiple external services. This centralized storage of multiple sensitive credentials should be of interest to security teams.

3/ Unverified Third-Party Tools

With hundreds of MCP servers available on the internet, any developer can download one from their CLI. Doing so without vetting, however, opens the door to supply chain attacks. The inherent design of MCP servers to transmit data to external services makes the situation risky, particularly in conjunction with the “set it and forget it” workflows explicitly encouraged by developers of agentic AI tools.

An MCP server could, for example, impersonate an official integration with a cloud database via typosquatting to exfiltrate the organization’s data and send it to the attacker. The attack could also be achieved through more complex, harder-to-detect methods, perhaps involving multiple tool calls chained together, with each tool receiving instructions the user might not see or grasp. Interesting research about this attack vector has been published by Invariant Labs.
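As an illustration of one cheap defense against the typosquatting scenario, the sketch below flags a server name that closely resembles, but does not exactly match, a name your organization already trusts. It uses Python’s standard `difflib` module; the approved names and the 0.85 similarity cutoff are assumptions chosen for the example, not recommended values.

```python
import difflib

# Hypothetical names of MCP servers the organization already trusts.
APPROVED = ["postgres-mcp", "github-mcp", "slack-mcp"]


def typosquat_suspects(candidate: str, approved=APPROVED, cutoff: float = 0.85):
    """Return approved names that `candidate` resembles without matching exactly.

    An empty list means the name is either an exact match or not similar
    to anything on the approved list.
    """
    name = candidate.lower()
    if name in approved:
        return []
    return difflib.get_close_matches(name, approved, n=3, cutoff=cutoff)
```

A name such as `postgress-mcp` would be flagged as a near match for `postgres-mcp`. A check like this only catches lookalike names; it says nothing about what the server’s code actually does, so it complements review rather than replacing it.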

Best Practices You Should Implement

Log All Application Prompts

Implement comprehensive logging for all prompts sent to MCP-enabled AI systems. The record will allow security teams to audit interactions, detect potential prompt injection attempts and establish baseline behaviors.
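A minimal sketch of what such a record could look like, using only Python’s standard library, follows. The field names (`session_id`, `source`) and the logger name are assumptions made for illustration; adapt them to whatever your logging pipeline expects.

```python
import hashlib
import json
import logging
import time

# Hypothetical logger name; route it to your SIEM or log store as needed.
audit_log = logging.getLogger("mcp.prompt_audit")


def build_prompt_record(session_id: str, source: str, prompt: str) -> dict:
    """Build one structured audit record for a prompt."""
    return {
        "ts": time.time(),
        "session_id": session_id,
        # Where the prompt came from, e.g. "user" or the name of an MCP server.
        "source": source,
        # The hash lets analysts spot repeated or altered prompts quickly.
        "sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt": prompt,
    }


def log_prompt(session_id: str, source: str, prompt: str) -> dict:
    """Emit the record as a single JSON line and return it."""
    record = build_prompt_record(session_id, source, prompt)
    audit_log.info(json.dumps(record))
    return record
```

Recording the source alongside the text matters here: it lets an auditor distinguish what the user typed from what an MCP server’s prompt repository contributed.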

Establish Governance Procedures for New MCP Servers

- Create a formal approval process for adding new MCP servers to your environment, including security reviews, source verification and documentation.

- Maintain an inventory of approved servers.

- Consider establishing an internal repository of vetted MCP servers rather than allowing direct installation from public sources.
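One way to enforce such an inventory, sketched below under stated assumptions, is to pin each approved server to the SHA-256 digest of the artifact that passed review and refuse anything else. The server name and the placeholder artifact bytes are hypothetical; in practice the digest would come from your review process.

```python
import hashlib

# Hypothetical inventory: server name -> SHA-256 digest of the reviewed artifact.
# The bytes below are a placeholder standing in for a real release file.
APPROVED_SERVERS = {
    "internal-crm-mcp": hashlib.sha256(b"reviewed build 1.0").hexdigest(),
}


def is_approved(name: str, artifact: bytes, inventory=APPROVED_SERVERS) -> bool:
    """Return True only if the server is inventoried and its artifact is unchanged."""
    expected = inventory.get(name)
    if expected is None:
        return False  # Not in the inventory: needs to go through approval first.
    return hashlib.sha256(artifact).hexdigest() == expected
```

Pinning a digest rather than just a name means an update to an approved server has to pass review again before it can be installed.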

Monitor Exposed API Keys in MCP Configuration Files

- Use secret scanning tools to identify potential leaks in configuration files.

- Use environment variables or dedicated secret management solutions instead of hard-coded credentials when possible.

- Ensure that keys have the minimum necessary permissions to reduce the impact of potential exposure.
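Dedicated secret scanners are the better choice in production, but a rough sketch shows the idea. The code below assumes a JSON configuration file with a top-level `mcpServers` object whose entries carry `args` and `env` fields, a layout used by several MCP clients; verify it against your own client. The two patterns are illustrative only and far from exhaustive.

```python
import json
import re

# Illustrative patterns only; real scanners ship far more rules.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),     # AWS access key ID format
    re.compile(r"ghp_[A-Za-z0-9]{36}"),  # GitHub personal access token format
]


def _looks_secret(value) -> bool:
    """Return True if the value matches any known credential pattern."""
    return any(p.search(str(value)) for p in SECRET_PATTERNS)


def find_hardcoded_secrets(config_text: str):
    """Return (server, location) pairs where a value looks like a credential."""
    config = json.loads(config_text)
    findings = []
    for server, spec in config.get("mcpServers", {}).items():
        for key, value in spec.get("env", {}).items():
            if _looks_secret(value):
                findings.append((server, f"env.{key}"))
        for index, arg in enumerate(spec.get("args", [])):
            if _looks_secret(arg):
                findings.append((server, f"args[{index}]"))
    return findings
```

Any finding is a cue to move that value into an environment variable or a secret manager and to rotate the exposed key.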

Stay Tuned

Today's overview describes the state of affairs as of June 2025. But, as with everything related to AI, the technology is rapidly evolving, making security a moving target. As more integrations and MCP implementations appear in the wild, the risk landscape is likely to change. For the time being, your best bet is to stay informed and ensure you have a holistic view of cloud and AI security.