AI is driving the future by allowing for rapid innovation at an unprecedented scale to gain competitive advantage. Organizations have already made the movement from being “AI curious” to “AI forward.” But this speed comes with the infamous “AI black box” problem. How do you successfully build and deploy your offerings that are based on AI models whose risks are based on deep embedded logic and nondeterministic behavior?

Trust becomes an important catalyst to speed. Your ability to move with speed and absolute trust demands a robust, continuous security strategy.

Discover, Assess, Protect as Part of the AI Security Lifecycle

Your success in the AI race depends on addressing the security challenge proactively. We champion a simple, three-phase framework for AI Security, designed to empower you to deploy AI bravely: Discover, Assess and Protect.

- Discover: You cannot assess the risk of what you cannot see. You must have a comprehensive, real-time inventory of all AI models, inference datasets, applications and AI agents across your entire enterprise to understand your full attack surface.

- Assess: You cannot protect against the risks you have not identified. Confidence in the world of AI starts with thorough, scalable and contextual assessments of both the model artifact and its runtime behavior.

- Protect: Finally, you must use these insights to deploy actionable security controls that neutralize threats and help maintain compliance across your ecosystem.

Prisma AIRS 2.0 is the unified platform that, along with a thorough discovery and a robust runtime protection, delivers the critical second pillar, the quintessential comprehensive assessment capability you need to move forward.

The Dual Challenges of AI Risk Assessment

The core challenge in AI security is the infamous "black box" problem. Traditional security operates by looking for known signatures and predictable execution paths, like a specific file hash or a buffer overflow attempt. AI systems are fundamentally different; their risks are based on hidden logic vulnerabilities (flaws in the training data or architecture) and response behaviors (unintended, harmful outputs).

Traditional security would treat an AI model as a static asset, ignoring the fact that its greatest risks lie within its complex, embedded logic, and in the case of generative models, their nondeterministic behavior. This is why a dedicated AI security assessment capability is essential.

Effective AI assessments must therefore solve for two distinct, yet interconnected, layers of risk:

- AI Model Security: Securing the integrity of the model artifact itself, which comprises the entire AI supply chain, from training data to compiled weights.

- AI Red Teaming: Securing the behavior of the model or app in its deployed context, addressing the immediate runtime exposure to adversarial inputs.

Prisma AIRS®, as a unified solution, delivers these capabilities and more, giving CIOs an actionable overview of their AI risk posture, from the core artifact to the live application behavior.

AI Model Security Protects the AI Supply Chain, One Model at a Time

Your AI models are now among your most valuable pieces of intellectual property, often representing millions of dollars in investment and years of proprietary data curation. However, the modern MLOps workflow often relies heavily on third-party and open-source models, which is a necessary speed multiplier that simultaneously creates supply chain vulnerabilities on an unprecedented scale.

The Risk of a Malicious AI Model

A model imported from a public repository or a partner may harbor hidden threats that can lead to major security incidents. This can range from embedded malware and Trojan models to hidden backdoors that facilitate IP exfiltration or allow an attacker to gain arbitrary code execution once the model is loaded into your sensitive environment. If your model's integrity is compromised, everything built on top of it is fundamentally flawed.

Prisma AIRS AI Model Security

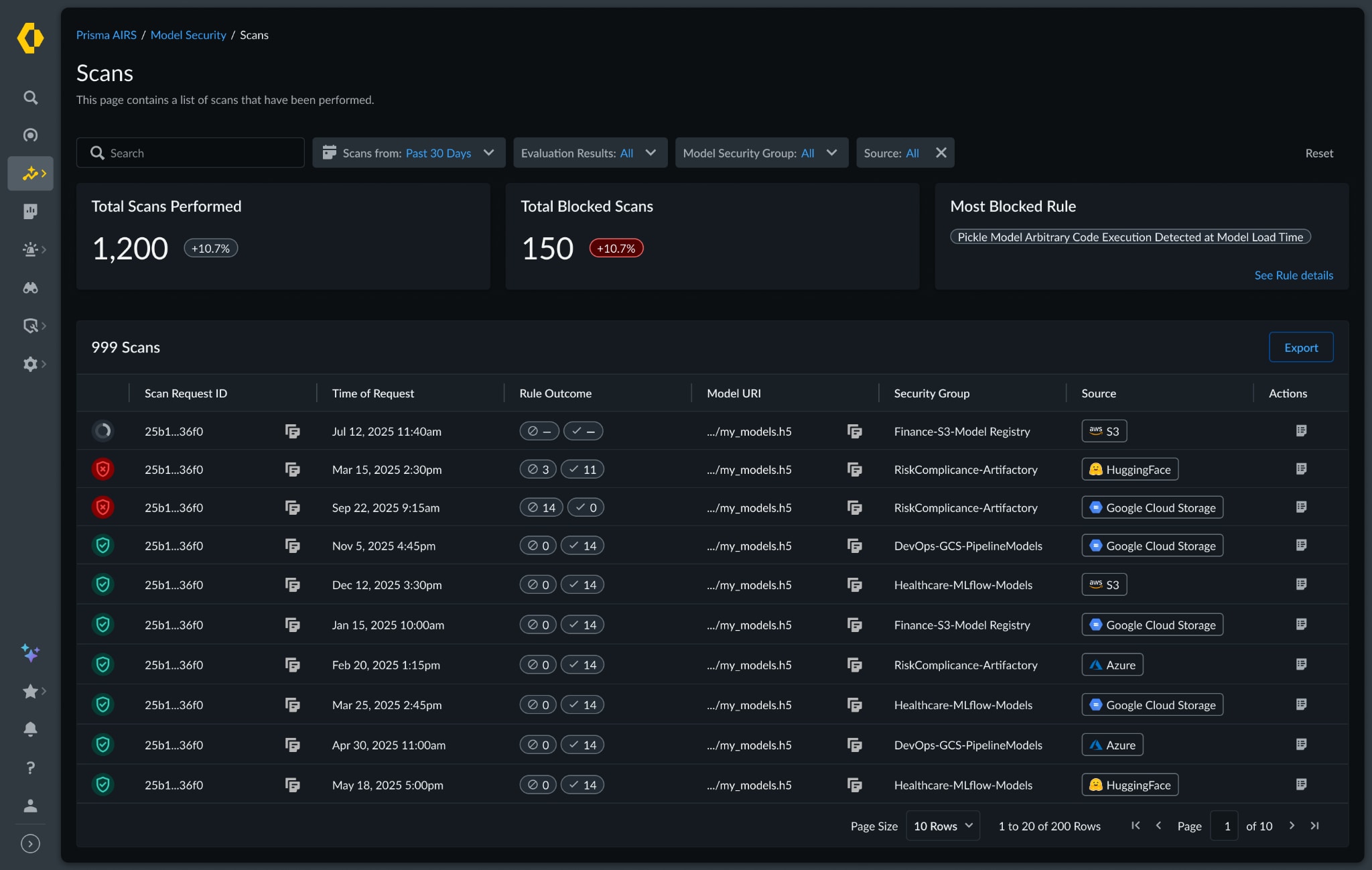

Prisma AIRS delivers AI Model Security by moving inspection left, into the CI/CD pipeline and model registry. It is purpose-built to look deep inside the model artifact, inspecting the complex structure of the model weights, metadata and dependencies of over 35 model formats (including PyTorch, TensorFlow, Keras, etc.) for architectural weaknesses and explicit threats.

Verified Provenance and Threat Intelligence: Prisma AIRS AI Model Security provides critical, curated intelligence, leveraging the collective power of Unit 42 and the 19,000+ members of Huntr community. Prisma AIRS goes beyond file scanning to check the provenance and integrity of open-source components, dependencies and complex data formats that often travel alongside the model, flagging components associated with known AI-specific vulnerabilities.

Prisma AIRS AI Model Security, by way of this deep integration and predeployment inspection, is designed to ensure the integrity of the core model asset before it reaches production, thereby neutralizing threats that traditional code and vulnerability scanners would inevitably miss.

Don’t fall into the Guardrail Trap: Watch our latest webinar to learn about the risks you can face if an AI model within your AI ecosystem has hidden vulnerabilities.

AI Red Teaming Is Turning Behavioral Risks Into Actionable Governance

Even a perfectly clean, validated model artifact can be exploited through prompt injection or behavioral manipulation once it is deployed. The speed of AI development and adoption requires continuous, scalable testing. Unfortunately, manual red teaming exercises are slow, expensive and quickly become outdated against the ever-evolving threats targeting AI systems. Human testers simply cannot generate the volume and variety of adversarial examples required to secure continuous delivery pipelines.

The Risk of Malignant AI Behavior

Behavioral risks, where an AI produces harmful, illegal or toxic outputs, are among the highest-impact that threat executives must manage. These failures can arise from sophisticated jailbreaks and insecure output handling that return malicious code or denial-of-service vectors. Because large language models are nondeterministic, rare edge cases can and will occur even under normal usage. Left unchecked, they expose the organization to financial loss, data leakage, regulatory fines and severe reputational damage. That’s why continuous, systematic red teaming (testing both intended workflows and adversarial edge cases) is essential.

Autonomous, Context-Aware AI Red Teaming

Prisma AIRS automates this critical function, providing continuous security testing that scales with your deployment velocity. This is security at the speed of AI.

- Dynamic Attack Simulation: Prisma AIRS AI Red Teaming deploys a dynamic, conversational AI Red Teaming Agent that mimics the behavior of a real-world attacker. The agent adapts its attacks based on the application's response, using combinations of advanced techniques like obfuscation, role playing and attempted system prompt extraction to truly stress test the system's defensibility.

- Comprehensive, Contextual Coverage: The platform executes over 500 distinct, real-world attack scenarios, including data exfiltration, generating harmful content and manipulating system prompts. Crucially, these scenarios can be customized to the application’s business context to provide meaningful and high-fidelity insights.

- Governance and Actionability: Prisma AIRS AI Red Teaming provides an aggregated, CIO-friendly risk score and findings report for your entire AI application portfolio – a single, quantifiable metric for the board. Furthermore, every discovered vulnerability is mapped directly to key governance frameworks, including the OWASP Top 10 for LLMs and the NIST AI Risk Management Framework. This mapping is vital, as it allows security teams to demonstrate auditable compliance.

AI Red Teaming with Prisma AIRS delivers an auditable, prioritized list of vulnerabilities that AI teams can focus on. Faster and more proactive identification can aid in faster remediation, thereby enabling organizations to demonstrate trust to regulators and customers.

Red Team Your Systems Before Attackers Do: Watch the webinar to find out why AI red teaming is an essential part of securing your AI deployments.

Converting Uncertainty to Measurable Confidence

Executives across every industry are pushing their teams to move from curiosity to full-scale deployment, and the winners of this AI race will be those who move with speed and with absolute trust. However, pursuing AI innovation without a foundational security strategy is simply accepting unacceptable financial and reputational debt. Stitching together point solutions is only a short-term fix.

By delivering AI Model Security and AI Red Teaming in a single unified platform, Prisma AIRS is designed to be an effective answer to the AI black box problem, turning uncertainty into measurable confidence at scale.

Secure your AI foundation. Secure your future. Deploy Bravely.

Contact us today to learn more about how Prisma AIRS can help you solve the AI Black Box problem.